Cameras

Stereo cameras

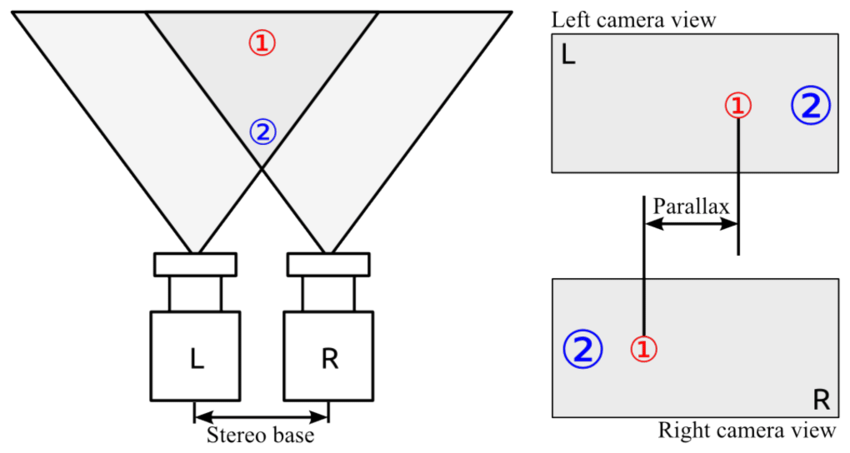

A stereo camera is a pair of cameras mounted on the same platform with parallel optical axes. The distance between the two optical axes, measured between the centers of the camera lenses, is called the baseline. The baseline is usually fixed and typically lies in the range of 50-100 mm.

Stereo cameras are used to capture 3D information about the scene.

Objects (1, 2) at different depths are captured from two different camera views. The horizontal displacement of an object's position between the left and right stereo images is called parallax (also called disparity) and depends on the object's distance.

To find the distance Z to an object, we need the baseline B, the focal length f (in pixels) and the parallax d. The baseline is fixed and known, the focal length comes from camera calibration, and the parallax is measured in pixels from the stereo image pair. For a rectified stereo pair, Z = f · B / d, so the parallax is inversely proportional to the distance: the closer the object, the larger the parallax.
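The depth relationship can be checked with a few lines of Python. This is a minimal sketch assuming an ideal, rectified stereo pair (parallel optical axes, no lens distortion); the function name `depth_from_disparity` and the focal length and baseline values are hypothetical, chosen for illustration.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Distance Z (meters) of a point seen by a rectified stereo pair: Z = f * B / d.

    focal_px: focal length f in pixels (from calibration)
    baseline_m: baseline B in meters
    disparity_px: parallax (disparity) d in pixels
    """
    if disparity_px <= 0:
        # d = 0 means the point is at infinity (or the stereo match failed).
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


# Hypothetical camera: f = 640 px, B = 50 mm.
for d in (64.0, 32.0, 16.0):
    z = depth_from_disparity(640.0, 0.05, d)
    print(f"parallax {d:4.0f} px -> distance {z:.2f} m")
```

With these numbers, halving the parallax doubles the distance (0.50 m, 1.00 m, 2.00 m). Because Z depends on 1/d, a one-pixel matching error changes the estimated distance far more for distant objects than for close ones.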

Camera parameters

Camera calibration

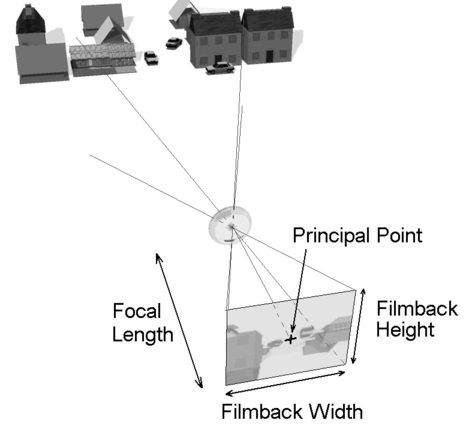

Camera calibration is the process of estimating a camera’s intrinsic and extrinsic parameters to correct distortions and ensure accurate measurements in computer vision tasks. It involves determining factors such as the focal length, the principal point and the lens distortion coefficients. To calibrate a camera, we take pictures of a calibration pattern (for example, a chessboard) from different angles and orientations.

The intrinsic parameters describe the camera’s internal characteristics, such as the focal length, principal point and lens distortion.

The extrinsic parameters describe the camera’s position and orientation in the world (a rotation matrix and a translation vector).
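How the two parameter sets work together can be sketched with the standard pinhole camera model: the extrinsics (R, t) move a world point into camera coordinates, and the intrinsic matrix K maps it to pixels. This is an illustrative plain-Python sketch that ignores lens distortion; the function name and the camera values are hypothetical. In practice a library such as OpenCV does this for you (`cv2.projectPoints`).

```python
from typing import Sequence, Tuple

Matrix3 = Sequence[Sequence[float]]


def project_point(K: Matrix3, R: Matrix3, t: Sequence[float],
                  X_world: Sequence[float]) -> Tuple[float, float]:
    """Project a 3D world point to pixel coordinates (u, v) with a pinhole model.

    K = [[fx, s, cx], [0, fy, cy], [0, 0, 1]]: intrinsics (s = skew, usually 0)
    R (3x3 rotation), t (translation): extrinsics, mapping world -> camera
    Lens distortion is ignored.
    """
    # Extrinsics: X_cam = R @ X_world + t
    X_cam = [sum(R[i][j] * X_world[j] for j in range(3)) + t[i] for i in range(3)]
    x, y, z = X_cam
    if z <= 0:
        raise ValueError("point is behind the camera")
    # Perspective division: normalized image coordinates.
    xn, yn = x / z, y / z
    # Intrinsics: normalized coordinates -> pixels.
    u = K[0][0] * xn + K[0][1] * yn + K[0][2]
    v = K[1][1] * yn + K[1][2]
    return u, v


# Hypothetical 640x480 camera (fx = fy = 600 px, principal point at the image center),
# placed at the world origin and looking along +Z (identity rotation, zero translation).
K = [[600.0, 0.0, 320.0], [0.0, 600.0, 240.0], [0.0, 0.0, 1.0]]
R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
t = [0.0, 0.0, 0.0]
print(project_point(K, R, t, [0.1, -0.05, 2.0]))
```

Calibration solves the inverse problem: given many known pattern points and their observed pixel positions, it estimates K (and the distortion coefficients) plus one (R, t) per calibration image.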

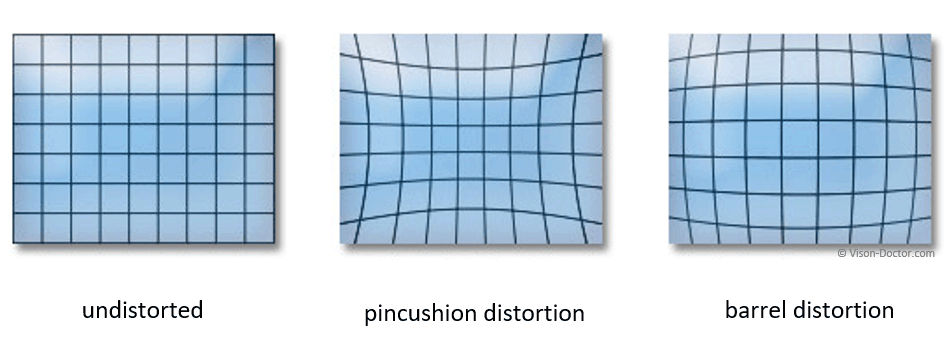

Lens distortion is caused by the optics of the camera lens. Radial distortion makes straight lines in the scene appear curved in the image (barrel or pincushion distortion).
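A common way to model this effect is the radial-tangential (Brown-Conrady) model, which is also OpenCV's default model, with coefficients ordered (k1, k2, p1, p2, k3). The sketch below applies it to normalized image coordinates (before multiplying by K); the coefficient value is hypothetical. It shows points on a straight horizontal line being bent by a negative radial coefficient (barrel distortion).

```python
from typing import Tuple


def distort(xn: float, yn: float, k1: float, k2: float = 0.0,
            p1: float = 0.0, p2: float = 0.0, k3: float = 0.0) -> Tuple[float, float]:
    """Apply radial (k1, k2, k3) and tangential (p1, p2) distortion to normalized coordinates."""
    r2 = xn * xn + yn * yn
    radial = 1.0 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
    xd = xn * radial + 2.0 * p1 * xn * yn + p2 * (r2 + 2.0 * xn * xn)
    yd = yn * radial + p1 * (r2 + 2.0 * yn * yn) + 2.0 * p2 * xn * yn
    return xd, yd


# Points on the straight line y = 0.5, distorted with a hypothetical k1 = -0.2.
for x in (-0.5, -0.25, 0.0, 0.25, 0.5):
    xd, yd = distort(x, 0.5, k1=-0.2)
    print(f"({x:+.2f}, 0.50) -> ({xd:+.3f}, {yd:.3f})")
```

After distortion the y coordinate is no longer constant (0.475 at the center of the line, 0.45 at its ends), so the straight line is imaged as a curve. Calibration estimates these coefficients so that the effect can be inverted (undistortion).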

Intel RealSense depth cameras (RGBD)

The Intel RealSense Depth Camera D400-Series uses stereo vision to calculate depth.

Intel RealSense SDK 2.0 (librealsense) is a cross-platform library for Intel RealSense depth cameras (D400 & L500 series and the SR300). The SDK allows depth and color streaming and provides intrinsic and extrinsic calibration information. The library also offers synthetic streams (point cloud, depth aligned to color and vice versa) and built-in support for recording and playing back streaming sessions.
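A minimal sketch of depth streaming with the SDK's Python bindings (`pyrealsense2`), assuming a D400 camera is connected. The pure helper `raw_depth_to_meters` (a hypothetical name) shows what the depth scale means: each z16 depth pixel is an integer that must be multiplied by the device's depth scale to get meters. The 0.001 m default below is an assumption for D400 cameras; query `get_depth_scale()` rather than hard-coding it.

```python
from typing import Tuple

# Assumption: D400 cameras default to 1 mm depth units; query the device to be sure.
D400_DEFAULT_DEPTH_SCALE = 0.001


def raw_depth_to_meters(raw: int, depth_scale: float = D400_DEFAULT_DEPTH_SCALE) -> float:
    """Convert a raw 16-bit (z16) depth value to meters; 0 means 'no depth data'."""
    return raw * depth_scale


def read_center_distance(width: int = 640, height: int = 480, fps: int = 30) -> Tuple[float, float]:
    """Stream depth from the first RealSense camera; return (distance at image center in m, depth scale)."""
    import pyrealsense2 as rs  # imported lazily so the helper above works without the SDK

    pipeline = rs.pipeline()
    config = rs.config()
    config.enable_stream(rs.stream.depth, width, height, rs.format.z16, fps)
    profile = pipeline.start(config)
    try:
        # Meters per z16 unit, as reported by the device.
        scale = profile.get_device().first_depth_sensor().get_depth_scale()
        frames = pipeline.wait_for_frames()
        depth = frames.get_depth_frame()
        # get_distance() already applies the depth scale.
        return depth.get_distance(width // 2, height // 2), scale
    finally:
        pipeline.stop()
```

Calling `read_center_distance()` on a machine with a camera returns the distance at the image center and the reported depth scale; a distance of 0 means the camera had no valid depth at that pixel.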

UVC (USB Video Class)

UVC (USB Video Class) cameras are USB devices that implement the standard USB video streaming class, so they work with the host's generic UVC driver without vendor-specific drivers.

UVC is supported by the Linux kernel and is natively available in most Linux distributions.

V4L

Video4Linux, V4L for short, is a collection of device drivers and an API for supporting real-time video capture on Linux systems.

V4L2 (Video4Linux2) is the second version of V4L.

V4L2 creates the device nodes (device files such as `/dev/videoX`, `/dev/vbiX` and `/dev/radioX`) through which applications access the devices, and manages the data exchanged through these nodes.

`v4l2-ctl` is a V4L2 utility for configuring video devices on Linux (installed as part of the `v4l-utils` package):

- `v4l2-ctl --list-devices` lists the available video devices.
- `v4l2-ctl --all` prints all information about the video device, including its controls.
- `v4l2-ctl --device=/dev/video0 --list-formats-ext` lists the video formats, resolutions and frame rates supported by the device.
- `v4l2-ctl --device=/dev/video0 --set-fmt-video=width=1920,height=1080 --verbose` sets the resolution (add `pixelformat=<fourcc>` to the list to set the pixel format as well).
- `v4l2-ctl --device=/dev/video0 --set-ctrl=focus_auto=0` disables autofocus.
- `v4l2-ctl --device=/dev/video0 --set-ctrl=focus_absolute=<value>` sets manual focus (the value range is device-specific, typically 0-255; `v4l2-ctl --list-ctrls` shows it).
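When a camera is configured from a script, the same `v4l2-ctl` calls can be built as argument lists and run with `subprocess`. This is a sketch with hypothetical helper names. The control names `focus_auto` and `focus_absolute` are the ones used above, but control names vary between drivers and kernel versions, so confirm them with `v4l2-ctl --list-ctrls`. Autofocus is disabled in a separate call because some drivers ignore or reject manual focus values while autofocus is still active.

```python
import subprocess
from typing import List, Optional


def set_format_cmd(device: str, width: int, height: int,
                   pixelformat: Optional[str] = None) -> List[str]:
    """v4l2-ctl argv that sets the resolution and, optionally, the pixel format (a FourCC such as MJPG)."""
    fmt = f"width={width},height={height}"
    if pixelformat:
        fmt += f",pixelformat={pixelformat}"
    return ["v4l2-ctl", f"--device={device}", f"--set-fmt-video={fmt}"]


def manual_focus_cmds(device: str, value: int) -> List[List[str]]:
    """Two v4l2-ctl calls: disable autofocus first, then set the absolute focus value."""
    return [
        ["v4l2-ctl", f"--device={device}", "--set-ctrl=focus_auto=0"],
        ["v4l2-ctl", f"--device={device}", f"--set-ctrl=focus_absolute={value}"],
    ]


def run(cmd: List[str]) -> str:
    """Run one command and return its output; raises CalledProcessError if v4l2-ctl fails."""
    return subprocess.run(cmd, check=True, capture_output=True, text=True).stdout


# Example (needs a camera and v4l-utils):
# run(set_format_cmd("/dev/video0", 1920, 1080, "MJPG"))
# for cmd in manual_focus_cmds("/dev/video0", 100):
#     run(cmd)
```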

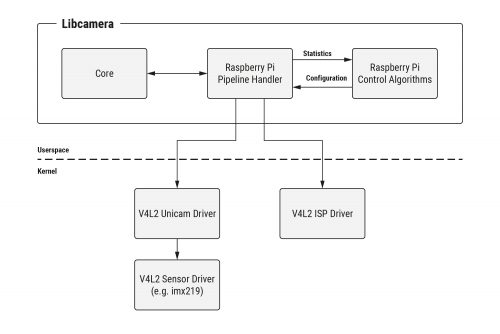

Libcamera

Libcamera is a cross-platform camera support library that provides a generic way to access and control camera devices. It is designed as a camera stack that is agnostic to the underlying hardware and supports multiple camera devices. Compared to V4L2, libcamera provides a higher level of abstraction and a more consistent API across different camera devices.

FFmpeg

FFmpeg is the leading multimedia framework, able to decode, encode, transcode, mux, demux, stream, filter and play pretty much anything that humans and machines have created.

Codecs

Common video encoders in FFmpeg (selected with `-c:v <name>`):

- `libx264` is a free software library and application for encoding video streams into the H.264/MPEG-4 AVC format.
- `h264_v4l2m2m` is a hardware-accelerated H.264 encoder using the V4L2 mem2mem API (for example, on Raspberry Pi).
- `h264_nvenc` is an NVIDIA GPU hardware-accelerated H.264 encoder (NVENC stands for NVIDIA Encoder).
- `libvpx-vp9` encodes VP9, a free video codec developed by Google as part of the WebM project, using the libvpx library. VP9 is widely used in WebRTC and on YouTube. Compared to H.264, VP9 typically provides better quality at the same bitrate.
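Why an encoder is needed at all becomes clear from the raw data rate. A small worked example in Python: the bits-per-pixel values follow from the pixel formats (8-bit YUV 4:2:0 such as I420 uses 12 bits per pixel, YUY2 uses 16, 8-bit RGB uses 24), while the 8 Mbit/s target is only an illustrative number, not a recommendation.

```python
def raw_bitrate_bps(width: int, height: int, fps: float, bits_per_pixel: float = 12.0) -> float:
    """Bitrate of uncompressed video in bits per second.

    12 bits/pixel: 8-bit YUV 4:2:0 (e.g. I420, NV12)
    16 bits/pixel: 8-bit YUV 4:2:2 (e.g. YUY2)
    24 bits/pixel: 8-bit RGB
    """
    return width * height * bits_per_pixel * fps


raw = raw_bitrate_bps(1920, 1080, 30)  # 1080p at 30 fps, I420
target = 8_000_000                     # hypothetical encoded bitrate: 8 Mbit/s
print(f"raw: {raw / 1e6:.1f} Mbit/s, compression needed: {raw / target:.0f}x")
```

For 1080p30 in I420 the raw stream is about 746.5 Mbit/s, so reaching 8 Mbit/s requires roughly 93:1 compression, which is the job of encoders such as libx264 or h264_nvenc.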

Commands

`ffmpeg` is a command-line tool that can be used to capture, convert and stream audio and video:

- `ffmpeg -f v4l2 -i /dev/video0 -c:v libx264 -f flv rtmp://localhost/live/stream` captures video from the V4L2 device (camera), encodes it using the libx264 codec and streams it to the RTMP server.
- `ffmpeg -codecs` lists all the codecs supported by FFmpeg.
- `ffmpeg -h encoder=libx264` lists the options for the libx264 encoder.
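The capture-and-stream command can be parameterized from Python. This sketch uses a hypothetical helper name and adds a few standard FFmpeg options: the V4L2 input options `-framerate` and `-video_size` (which must come before `-i`), `-preset veryfast -tune zerolatency` for lower latency with libx264, and `-pix_fmt yuv420p`, because many webcams deliver YUYV 4:2:2 while most H.264 players expect 4:2:0. Whether the camera supports the requested size and rate is an assumption; check with `v4l2-ctl --list-formats-ext`.

```python
import shlex
from typing import List


def v4l2_to_rtmp_cmd(device: str, url: str, width: int = 1280, height: int = 720,
                     fps: int = 30, encoder: str = "libx264") -> List[str]:
    """Build an ffmpeg argv that captures from a V4L2 device and streams H.264 over RTMP (FLV)."""
    cmd = [
        "ffmpeg",
        # Input options apply to the V4L2 capture device and must precede -i.
        "-f", "v4l2",
        "-framerate", str(fps),
        "-video_size", f"{width}x{height}",
        "-i", device,
        "-c:v", encoder,
    ]
    if encoder == "libx264":
        # Trade some compression efficiency for lower encoding latency.
        cmd += ["-preset", "veryfast", "-tune", "zerolatency"]
    cmd += [
        "-pix_fmt", "yuv420p",  # 4:2:0 chroma, expected by most H.264 decoders
        "-f", "flv", url,
    ]
    return cmd


cmd = v4l2_to_rtmp_cmd("/dev/video0", "rtmp://localhost/live/stream")
print(shlex.join(cmd))  # copy-pasteable shell command
```

To actually start streaming, pass the list to `subprocess.run(cmd)`; passing `encoder="h264_nvenc"` or `encoder="h264_v4l2m2m"` switches to a hardware encoder and skips the libx264-specific options.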

`ffplay` is a simple media player based on SDL and the FFmpeg libraries:

- `ffplay -f v4l2 -i /dev/video0` plays the video stream from the V4L2 device.
- `ffplay video.mp4` plays the video file.
- `ffplay rtmp://localhost/live/stream` plays the video stream from the RTMP server.
- `ffplay -fflags nobuffer rtmp://localhost:1935/live/1234` plays the video stream from the RTMP server without buffering (for low latency).

`ffprobe` is a command-line tool that shows information (such as codecs, bitrates, …) about multimedia streams:

- `ffprobe video.mp4` provides information about the video file.
- `ffprobe /dev/video0` provides information about the V4L2 device.

GStreamer

GStreamer is a pipeline-based multimedia framework for creating streaming media applications. It links together a wide variety of media processing components (elements) to complete complex workflows. For instance, GStreamer can be used to build a system that reads files in one format, processes them, and exports them in another.

Note

GStreamer provides a more flexible and modular approach to multimedia processing: developers can easily create custom pipelines and plug in their own components. FFmpeg, on the other hand, focuses more on providing ready-made tools and offers less flexibility for customization.
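Pipelines are not limited to the `gst-launch-1.0` command line: the same textual description can be run from an application. A minimal sketch assuming the PyGObject bindings (`gi`) and GStreamer 1.0 are installed; `make_pipeline` and `run_pipeline` are hypothetical helper names, while `Gst.parse_launch` and the bus calls belong to the GStreamer API. The example pipeline uses `videotestsrc`, so no camera is needed.

```python
from typing import Sequence


def make_pipeline(elements: Sequence[str]) -> str:
    """Join element descriptions into a gst-launch style pipeline string."""
    return " ! ".join(elements)


def run_pipeline(description: str) -> bool:
    """Run a pipeline until end-of-stream or error; return True if it ended with EOS."""
    import gi  # PyGObject; imported lazily so make_pipeline() works without GStreamer
    gi.require_version("Gst", "1.0")
    from gi.repository import Gst

    Gst.init(None)
    pipeline = Gst.parse_launch(description)
    pipeline.set_state(Gst.State.PLAYING)
    bus = pipeline.get_bus()
    # Block until the pipeline finishes (EOS) or fails (ERROR).
    msg = bus.timed_pop_filtered(Gst.CLOCK_TIME_NONE,
                                 Gst.MessageType.EOS | Gst.MessageType.ERROR)
    pipeline.set_state(Gst.State.NULL)
    return msg is not None and msg.type == Gst.MessageType.EOS


# 300 test frames -> format conversion -> platform's default video sink.
desc = make_pipeline(["videotestsrc num-buffers=300", "videoconvert", "autovideosink"])
```

Calling `run_pipeline(desc)` opens a window with the test pattern and returns after the 300 buffers have been played; each element in the list can be swapped for another (for example, a camera source) without changing the rest of the program.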

How to use a CSI camera with NVIDIA Jetson

1. Install `v4l-utils` for working with V4L2 devices and `v4l2loopback-dkms` for creating virtual video devices.
2. Load the v4l2loopback module, which creates a virtual video device (here assumed to be `/dev/video1`; check the actual node with `v4l2-ctl --list-devices`):

    `sudo modprobe v4l2loopback exclusive_caps=1`

3. Stream the video from the CSI camera (`nvarguscamerasrc`) to the virtual video device:

    `gst-launch-1.0 nvarguscamerasrc sensor-mode=4 ! 'video/x-raw(memory:NVMM),width=1280,height=720,framerate=30/1' ! nvvidconv ! 'video/x-raw,format=I420' ! queue ! videoconvert ! 'video/x-raw,format=YUY2' ! v4l2sink device=/dev/video1`

4. View the video stream from the virtual video device with `ffplay /dev/video1`, or use the `cheese` application (a simple webcam viewer for GNOME).
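The Jetson pipeline can also be started from a script, for example to change the resolution or frame rate or to enable debug output. This sketch uses hypothetical helper names and builds the same `gst-launch-1.0` command as an argument list (no shell quoting of the caps is needed because no shell is involved); it can optionally set `GST_DEBUG` for the child process only. Whether a given sensor mode supports the chosen size and frame rate depends on the camera module and is an assumption here.

```python
import os
import subprocess
from typing import List, Optional


def jetson_csi_to_loopback_cmd(device: str = "/dev/video1", width: int = 1280,
                               height: int = 720, fps: int = 30,
                               sensor_mode: int = 4) -> List[str]:
    """gst-launch-1.0 argv: CSI camera (nvarguscamerasrc) -> v4l2loopback device."""
    return [
        "gst-launch-1.0",
        "nvarguscamerasrc", f"sensor-mode={sensor_mode}", "!",
        # Frames stay in NVMM (NVIDIA hardware buffer) memory until nvvidconv copies them out.
        f"video/x-raw(memory:NVMM),width={width},height={height},framerate={fps}/1", "!",
        "nvvidconv", "!", "video/x-raw,format=I420", "!",
        "queue", "!",
        # YUY2 is widely accepted by V4L2 consumers such as ffplay and cheese.
        "videoconvert", "!", "video/x-raw,format=YUY2", "!",
        "v4l2sink", f"device={device}",
    ]


def start(cmd: List[str], debug_level: Optional[int] = None) -> subprocess.Popen:
    """Start the pipeline in the background; optionally set GST_DEBUG for this process only."""
    env = dict(os.environ)
    if debug_level is not None:
        env["GST_DEBUG"] = str(debug_level)
    return subprocess.Popen(cmd, env=env)


# Example (on a Jetson with the v4l2loopback module loaded):
# proc = start(jetson_csi_to_loopback_cmd(), debug_level=3)
# ...
# proc.terminate()
```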

Note

If the GStreamer pipeline fails, try restarting the nvargus daemon: `sudo systemctl restart nvargus-daemon.service`

The `GST_DEBUG` environment variable sets the GStreamer debug log level and can be used to debug pipelines (higher values are more verbose). For example: `GST_DEBUG=3 gst-launch-1.0 ...`

Useful Resources

List of 3D Sensors (depth cameras) for robotics applications

OpenMV Cams: machine vision with Python. The goal of the OpenMV project is to make building machine vision applications on high-performance, low-power microcontrollers easy.